Login Servers

S4 has a new set of login servers:-

login1.sciama.icg.port.ac.uk – also supports NoMachine

login2.sciama.icg.port.ac.uk – also supports NoMachine

login3.sciama.icg.port.ac.uk – also supports X2Go

login4.sciama.icg.port.ac.uk

login5.sciama.icg.port.ac.uk – also supports X2Go

login6.sciama.icg.port.ac.uk

login7.sciama.icg.port.ac.uk

login8.sciama.icg.port.ac.uk

Initially, use login1. The SSH keys you used in the old environment should also work in the new one.
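To avoid typing the full host name every time, you can give login1 a short alias in your SSH client configuration. The sketch below assumes OpenSSH. The alias `sciama` and the function name `sciama_ssh_config` are hypothetical, and you must pass your own Sciama username.

```shell
# Print an OpenSSH ~/.ssh/config stanza for Sciama.
# Usage: sciama_ssh_config USERNAME [HOSTNAME]
# "sciama" is a hypothetical alias; HOSTNAME defaults to login1.
sciama_ssh_config() {
  local user="$1" host="${2:-login1.sciama.icg.port.ac.uk}"
  cat <<EOF
Host sciama
    HostName ${host}
    User ${user}
EOF
}
```

Running `sciama_ssh_config your_username >> ~/.ssh/config` appends the stanza, after which `ssh sciama` connects to login1. To point the alias at a different login server, pass it as the second argument, for example `login3.sciama.icg.port.ac.uk`.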

If you prefer to log in using a remote Linux desktop, follow the instructions for NoMachine here (login1 and login2 only). Alternatively, to use X2Go (login3 and login5 only), follow the instructions here.

Modules

Once you have logged in, you will notice errors caused by the modules set in your old environment. Remove any “special” module commands from your .bashrc. Then move your old .modules file aside and copy the new default .modules file into your $HOME:-

> mv $HOME/.modules $HOME/module.old

> cp /opt/apps/etc/modules/modules.default $HOME/.modules
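As written, the `mv` above silently overwrites any existing `$HOME/module.old`, so running the commands twice loses your original file. The sketch below is a more defensive version of the same two steps. The function name `reset_modules` is hypothetical. The `MODULES_DEFAULT` override is also hypothetical and exists only so the function can be tested away from Sciama. Neither is part of the Sciama setup.

```shell
# reset_modules [HOME_DIR]
# Move HOME_DIR/.modules to HOME_DIR/module.old (refusing to overwrite an
# existing backup), then install the default modules file.
# MODULES_DEFAULT is a hypothetical override used only for testing.
reset_modules() {
  local home="${1:-$HOME}"
  local default="${MODULES_DEFAULT:-/opt/apps/etc/modules/modules.default}"
  if [ ! -r "$default" ]; then
    echo "reset_modules: cannot read $default" >&2
    return 1
  fi
  if [ -e "$home/module.old" ]; then
    echo "reset_modules: $home/module.old already exists, not overwriting it" >&2
    return 1
  fi
  # Only back up .modules if there is one to back up.
  if [ -f "$home/.modules" ]; then
    mv "$home/.modules" "$home/module.old"
  fi
  cp "$default" "$home/.modules"
}
```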

Once the old modules are removed, you can add new ones. The “module avail” command should work as usual. Some modules will be missing. As you can imagine, many packages installed over the last five years are no longer used, so we have decided to create a new module tree containing only the modules that are currently needed. If a module you need is missing, please email “icg-computing@port.ac.uk”.

There is currently a bug in the modules command: the “initadd” function is broken, so modules cannot be saved between sessions. As a workaround, please use the add function as normal, but also add the following line to your .bashrc:-

source $HOME/.modules
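A slightly more defensive form of this .bashrc line uses an absolute path, so it works whatever directory the shell starts in, and does nothing when the file is missing. The function name `load_saved_modules` is hypothetical. The `.` command is the POSIX spelling of bash's `source`.

```shell
# ~/.bashrc fragment (sketch): reload the modules saved in ~/.modules at
# each login, as a workaround for the broken "module initadd".
load_saved_modules() {
  local file="${1:-$HOME/.modules}"
  [ -r "$file" ] || return 0   # nothing saved yet: do nothing
  . "$file"                    # same as: source "$file"
}
load_saved_modules
```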

The software provided by the modules is now installed under /opt/apps/pkgs. You may need to change any hardcoded paths in your scripts and programs.
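A recursive `grep` is usually enough to find scripts that still point at the old locations. The old install prefix is not given here. In the usage below, `/old/apps` is only a placeholder: replace it with the prefix your scripts actually used. The helper name `find_hardcoded_paths` is hypothetical. To find the new location of a package, `module show <name>` lists the paths that a module sets.

```shell
# find_hardcoded_paths OLD_PREFIX FILE_OR_DIR...
# Print file:line:text for every line that still mentions OLD_PREFIX
# (fixed-string match, binary files skipped).
find_hardcoded_paths() {
  local old_prefix="$1"
  shift
  grep -rnHIF -- "$old_prefix" "$@"
}
```

As an example, `find_hardcoded_paths /old/apps ~/scripts ~/src` searches two hypothetical directories. `grep` exits with status 1 when nothing is found.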

If you use home-grown executables, I suggest you recompile everything, since the libraries they were built against may have moved.
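Recompiling is the safe option. A quick way to spot executables that are already broken is to ask `ldd` which shared libraries no longer resolve. This is a sketch with a hypothetical helper name. A binary that passes the check may still need rebuilding. Some versions of `ldd` can execute the program being inspected, so only run the check on binaries you built yourself.

```shell
# find_broken_binaries FILE...
# Print each executable whose shared libraries no longer resolve
# (ldd reports "not found"); scripts and static binaries are skipped.
find_broken_binaries() {
  local f
  for f in "$@"; do
    [ -f "$f" ] && [ -x "$f" ] || continue
    if ldd "$f" 2>/dev/null | grep -q 'not found'; then
      printf '%s\n' "$f"
    fi
  done
}
```

For example, `find_broken_binaries ~/bin/*` prints one path per broken executable.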

Python / Anaconda

As usual, several Python versions are available as modules:-

anaconda/2019.03 – Based on Python 2.7.16.

anaconda3/2019.03 – Based on Python 3.7.3.

cpython/2.7.16 – This has (mostly) the same Python packages as the 2.7.8 tree on the old Sciama.

cpython/2.7.13

cpython/3.6.1

cpython/3.7.1
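With several Python installations available, a job can easily run a different interpreter from the one you intended, for example when a `module load` line is missing. The guard below makes a job script stop early when the Python on the PATH is not the expected MAJOR.MINOR version. It is a sketch, and the function name `check_python` is hypothetical.

```shell
# check_python WANT [INTERPRETER]
# Succeed only if INTERPRETER (default: python) reports version WANT,
# given as MAJOR.MINOR, e.g. 3.7.
check_python() {
  local want="$1" py="${2:-python}" have
  have=$("$py" -c 'import sys; print("%d.%d" % sys.version_info[:2])') || return 1
  if [ "$have" != "$want" ]; then
    echo "check_python: expected Python $want but $py is $have" >&2
    return 1
  fi
}
```

In a job script, call it straight after `module load anaconda3/2019.03`, for example `check_python 3.7 || exit 1`.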

SLURM

The queuing system has also changed. We have moved from Torque/Maui to SLURM, the de facto standard scheduler on academic supercomputers. All your job scripts will need to be modified, but the changes should be relatively easy.
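The usual correspondences between everyday Torque/Maui and SLURM commands are summarised below as a small lookup function. The name `slurm_equivalent` is hypothetical. The options of each command differ, so check the SLURM documentation before reusing old flags.

```shell
# slurm_equivalent TORQUE_COMMAND
# Print the nearest SLURM command for a common Torque/Maui command.
slurm_equivalent() {
  case "$1" in
    qsub)        echo "sbatch" ;;   # submit a batch job script
    qstat|showq) echo "squeue" ;;   # list queued and running jobs
    qdel)        echo "scancel" ;;  # cancel a job
    pbsnodes)    echo "sinfo" ;;    # node and partition status
    *) echo "slurm_equivalent: no mapping for '$1'" >&2; return 1 ;;
  esac
}
```

For example, jobs are submitted with `sbatch job.slurm`, and `squeue -u $USER` lists your own jobs.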

“Queues” have been replaced by “partitions”. The new partitions are:-

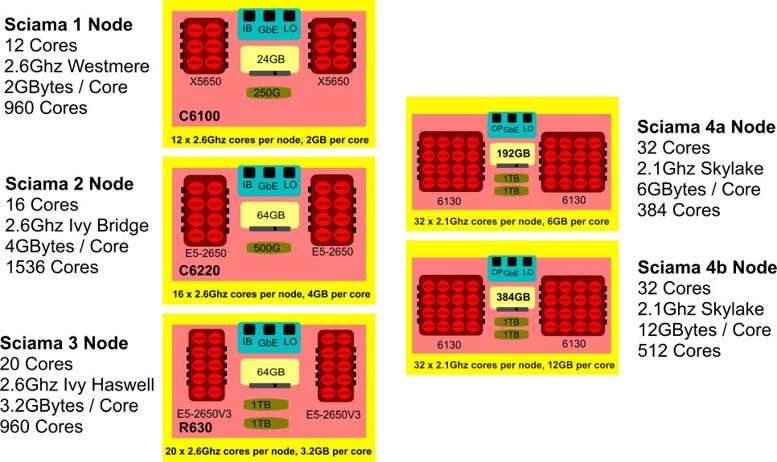

| Partition (queue) | Cores per node | Memory per core | No. of nodes | No. of cores |
|---|---|---|---|---|
| Sciama2.q | 16 | 4G | 95 | 1520 |
| Sciama3.q | 20 | 3.2G | 48 | 960 |
| Sciama4.q | 32 | 6G | 12 | 384 |
| Sciama4-12.q | 32 | 12G | 16 | 512 |
| Himem.q | 16 | 512G total | 1 | 16 |

The sciama1.q hardware will be decommissioned.
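When you move a job between partitions, the number of nodes needed for a given core count changes with the cores per node. The sketch below encodes that column of the partition table above. The helper names are hypothetical. The functions accept partition names with either capitalisation, because the exact spelling that SLURM uses should be checked with `sinfo -s`.

```shell
# partition_cores PARTITION: cores per node, copied from the partition table.
partition_cores() {
  case "$1" in
    [Ss]ciama2.q)                 echo 16 ;;
    [Ss]ciama3.q)                 echo 20 ;;
    [Ss]ciama4.q|[Ss]ciama4-12.q) echo 32 ;;
    [Hh]imem.q)                   echo 16 ;;
    *) echo "partition_cores: unknown partition '$1'" >&2; return 1 ;;
  esac
}

# nodes_needed NTASKS PARTITION: smallest number of whole nodes giving NTASKS cores.
nodes_needed() {
  local ntasks="$1" cores
  cores=$(partition_cores "$2") || return 1
  echo $(( (ntasks + cores - 1) / cores ))   # integer ceiling division
}
```

For example, `nodes_needed 256 sciama4.q` prints 8, while `nodes_needed 256 sciama3.q` prints 13. Use the printed value in your `#SBATCH --nodes` line.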

The other, less obvious, syntax change is in how nodes and cores are requested:-

#PBS -l nodes=16:ppn=16

changes to

#SBATCH --nodes=8

#SBATCH --ntasks=256

which is 8 nodes of 32 cores: the same 256 cores as the 16 nodes of 16 cores requested in the Torque example.
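For more than a handful of scripts, the directive translation can be automated. The sketch below uses hypothetical names (`pbs_to_sbatch` and `convert_job_script`) and a default of 32 cores per node, matching Sciama4.q and Sciama4-12.q. It handles only `-N`, `-q`, `-l walltime=` and `-l nodes=N:ppn=P`, and it keeps the total core count. Any other `#PBS` line is left as a TODO comment. The queue name is copied verbatim, so check it against the partition table. Torque variables in the script body, such as `$PBS_O_WORKDIR`, still need converting by hand.

```shell
# pbs_to_sbatch LINE [CORES_PER_NODE]
# Translate one "#PBS" directive into "#SBATCH" line(s).
pbs_to_sbatch() {
  local line="$1" cores_per_node="${2:-32}" rest spec nodes ppn ntasks
  rest=${line#"#PBS "}
  case "$rest" in
    "-N "*) echo "#SBATCH --job-name=${rest#-N }" ;;
    "-q "*) echo "#SBATCH --partition=${rest#-q }" ;;   # queue name copied as-is
    "-l "*,*) printf '# TODO (one resource per -l line): %s\n' "$line" ;;
    "-l walltime="*) echo "#SBATCH --time=${rest#-l walltime=}" ;;
    "-l nodes="*:ppn=*)
      spec=${rest#-l nodes=}          # e.g. 16:ppn=16
      nodes=${spec%%:*}
      ppn=${spec##*ppn=}
      ppn=${ppn%%:*}                  # drop any further :feature suffix
      ntasks=$(( nodes * ppn ))
      # Keep the total core count and repack it onto CORES_PER_NODE-core nodes.
      echo "#SBATCH --nodes=$(( (ntasks + cores_per_node - 1) / cores_per_node ))"
      echo "#SBATCH --ntasks=$ntasks"
      ;;
    *) printf '# TODO (not translated): %s\n' "$line" ;;
  esac
}

# convert_job_script CORES_PER_NODE < old.pbs > new.slurm
# Copy a Torque script, translating its #PBS lines and leaving the rest as-is.
convert_job_script() {
  local cores_per_node="$1" l
  while IFS= read -r l || [ -n "$l" ]; do
    case "$l" in
      "#PBS "*) pbs_to_sbatch "$l" "$cores_per_node" ;;
      *) printf '%s\n' "$l" ;;
    esac
  done
}
```

Running `convert_job_script 32 < job.pbs > job.slurm` writes a converted copy. With the example above, `#PBS -l nodes=16:ppn=16` becomes `#SBATCH --nodes=8` followed by `#SBATCH --ntasks=256`.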

More SLURM information can be found here.