While SCIAMA does not provide any local backup storage for important data, it is now possible to move your important data directly onto a cloud service for long-term storage, and back again for further processing. A good choice is Google Drive, for which every university member has an account with unlimited storage capacity, but various other services are also supported (not all of which have been tested yet), e.g. Amazon Drive, Amazon S3, Microsoft OneDrive and Dropbox.

The following sections explain how to do this, using Google Drive as an example.

Loading/configuring rclone

We use rclone to access and modify the remote cloud drive (e.g. to move data). rclone is available on SCIAMA; to use it, simply load the cpython/2.7.16 or cpython/3.8.3 module on the command line, e.g.:

module load cpython/2.7.16

If you haven’t done so already, you now have to configure your cloud storage. Simply execute

rclone config

Select (n) to configure a new storage, or (e) to edit an existing one. In the former case, you are asked to provide a name for this config. This will be the name of the ‘drive’, so you may want to keep it short and avoid special characters and white space (in this example ‘test_drive’):

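name> test_drive

You are then asked to select the type of storage. In this example, (9) selects Google Drive; the numbering can differ between rclone versions, so check the list, or type the name given in quotes (here "drive") instead: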

Type of storage to configure.

Choose a number from below, or type in your own value

1 / Amazon Drive

\ "amazon cloud drive"

2 / Amazon S3 (also Dreamhost, Ceph, Minio)

\ "s3"

3 / Backblaze B2

\ "b2"

4 / Box

\ "box"

5 / Dropbox

\ "dropbox"

6 / Encrypt/Decrypt a remote

\ "crypt"

7 / FTP Connection

\ "ftp"

8 / Google Cloud Storage (this is not Google Drive)

\ "google cloud storage"

9 / Google Drive

\ "drive"

10 / Hubic

\ "hubic"

11 / Local Disk

\ "local"

12 / Microsoft Azure Blob Storage

\ "azureblob"

13 / Microsoft OneDrive

\ "onedrive"

14 / Openstack Swift (Rackspace Cloud Files, Memset Memstore, OVH)

\ "swift"

15 / Pcloud

\ "pcloud"

16 / QingClound Object Storage

\ "qingstor"

17 / SSH/SFTP Connection

\ "sftp"

18 / Webdav

\ "webdav"

19 / Yandex Disk

\ "yandex"

20 / http Connection

\ "http"

Storage> 9

Leave the next two questions, about the Google Application Client Id and Secret, blank (i.e. just press RETURN):

Google Application Client Id - leave blank normally.

client_id>

Google Application Client Secret - leave blank normally.

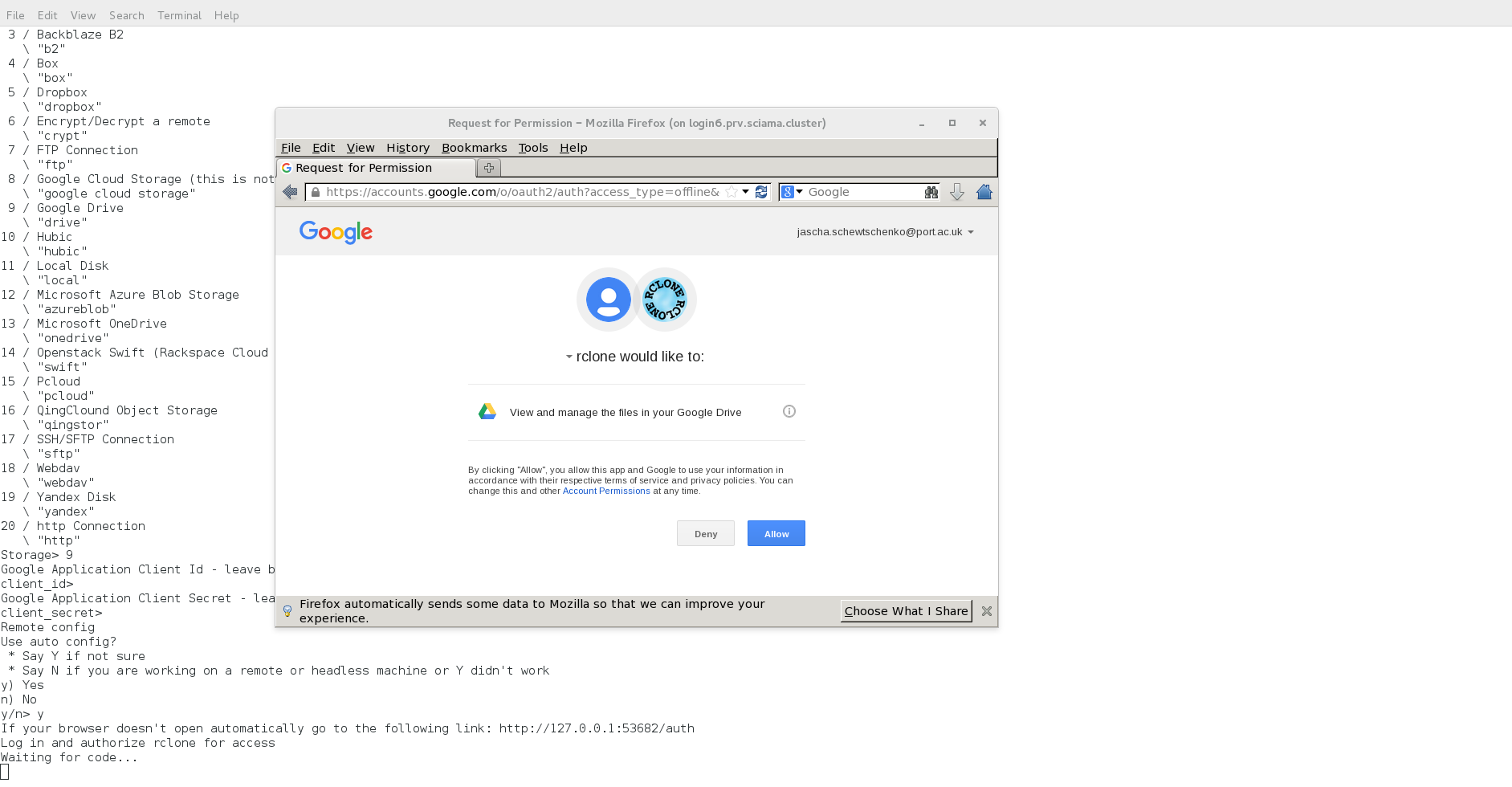

client_secret>

Then use the auto config to link the drive to your account: a browser window with a Google login page should open, where you enter your credentials and allow rclone access.

Once this has completed successfully, you have to decide whether this should be configured as a team drive or not (in this example, we chose not to):

Configure this as a team drive?

y) Yes

n) No

y/n> n

Finally, confirm all the settings to finish the configuration. Your (Google Drive) storage should now be listed at the top of the main rclone config menu, alongside all your other configured storages.
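Once the configuration is finished, a quick way to confirm that rclone knows about the new remote is `rclone listremotes`, which prints each configured remote on its own line with a trailing colon (e.g. `test_drive:`). The function below is a minimal sketch wrapping that check; the name `has_remote` is hypothetical, and it assumes rclone is already on your PATH after loading the module as described above.

```shell
# Minimal sketch: succeed if an rclone remote with the given name is configured.
# Assumes rclone is on your PATH (e.g. after "module load cpython/2.7.16").
# has_remote is a hypothetical helper name, not part of rclone.
has_remote() {
    local name="$1"
    # "rclone listremotes" prints one remote per line, e.g. "test_drive:".
    # -x: the whole line must match; -F: treat "<name>:" as a fixed string.
    rclone listremotes | grep -qxF -- "${name}:"
}
```

For example, `has_remote test_drive && echo "test_drive is configured"`.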

Using remote storage with rclone

To access and manipulate the remote storage filesystem, you use the rclone tool, which offers a wide variety of commands. For the full list of commands and their arguments, run

rclone help

The following are the commands probably most useful for our users, together with their bash equivalents:

cat - same as in bash; concatenates files and sends them to stdout

copyto - in bash: cp -r; copies files/directories from source to destination

delete - in bash: rm on every file under the path; deletes the files in path but leaves the directories in place (to remove a directory and all of its contents, like rm -r, use purge)

ls, lsd, lsl - in bash: ls, ls -d, ls -l; list the contents of path (lsd lists directories only, lsl adds modification times)

mkdir - same as in bash; creates a directory

moveto - in bash: mv; moves files or directories from source to destination

rcat - in bash: ‘>’; copies standard input into a remote file

rmdir - same as in bash; removes an empty directory

size - in bash: ‘du -s’; prints the total size and number of objects in remote:path

sync - similar to rsync; makes source and destination identical, modifying the destination only
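To see several of the commands listed above in action, the following sketch wraps a few of them in a shell function that you could paste into your shell or source from a file. It assumes rclone is on your PATH and a remote such as `test_drive` is configured; the function name `rclone_tour` and the paths `backup`, `notes.txt` and `~/results` are hypothetical examples. Running it writes `notes.txt` to your drive, while `sync` with `--dry-run` only reports what it would change, without modifying anything.

```shell
# Minimal sketch exercising some of the rclone commands listed above.
# Assumes rclone is on your PATH and the given remote is configured.
# rclone_tour, "backup", "notes.txt" and ~/results are hypothetical names.
rclone_tour() {
    local remote="${1:-test_drive}"   # remote name, defaults to the example

    # lsd: list the top-level directories on the remote
    rclone lsd "${remote}:"

    # size: total size and number of objects under a path (like 'du -s')
    rclone size "${remote}:backup"

    # rcat: store standard input in a remote file; cat: print it back
    echo "hello from SCIAMA" | rclone rcat "${remote}:notes.txt"
    rclone cat "${remote}:notes.txt"

    # sync --dry-run: report what sync would change on the destination,
    # without copying or deleting anything
    rclone sync ~/results "${remote}:backup/results" --dry-run
}
```

For example, `rclone_tour test_drive`.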

Here is an example of creating a directory on the remote storage from the previous section (test_drive), copying a file to and from it, and then deleting everything on the remote storage again:

rclone mkdir test_drive:my_dir

rclone copyto ~/some_file.dat test_drive:my_dir/some_file.dat

rclone copyto test_drive:my_dir/some_file.dat copy.dat

rclone delete test_drive:my_dir

rclone rmdir test_drive:my_dir
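For regular backups you may want to combine these steps. The sketch below copies a local directory to the remote with `copyto` (which, unlike `sync`, never deletes anything already on the remote) and then runs `rclone check --one-way`, which verifies that every source file is present and matching on the destination. The helper name `backup_dir` and the example paths are hypothetical; it assumes rclone is on your PATH and the remote is configured.

```shell
# Minimal sketch: copy a local directory to a remote path and verify the copy.
# Assumes rclone is on your PATH and the remote (e.g. test_drive) is configured.
# backup_dir is a hypothetical helper name, not part of rclone.
backup_dir() {
    local src="$1"    # local directory, e.g. ~/results
    local dest="$2"   # remote path, e.g. test_drive:backup/results

    if [ ! -d "$src" ]; then
        echo "backup_dir: '$src' is not a directory" >&2
        return 1
    fi

    # copyto with a directory source behaves like copy: it copies the
    # contents to dest and does not delete files that exist only on the remote
    rclone copyto "$src" "$dest" || return 1

    # check --one-way: every file in src must exist and match on dest
    rclone check "$src" "$dest" --one-way
}
```

For example, `backup_dir ~/results test_drive:backup/results && echo "backup verified"`.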